On Securing Model Supply Chains

Remember PoisonGPT? The "four steps to poison LLM supply chain" post went viral on HN last week. They took a popular open-source language model (GPT-J-6B), edited it with ROME (Rank-One Model Editing, a clever technique that treats the LLM's weights as a giant key-value store of facts and "inserts" a new fact), and then re-uploaded it with a slight typo in the org name.

The post didn't elaborate on how successful this approach was, but package registries are all too familiar with typosquatting and how effective it is in every language ecosystem.

This raises the fascinating question of what ML model supply chain security even means. While the Chainguards of the world have been hard at work on reproducible builds & SBOMs for containers, we've been happily shipping increasingly powerful derivatives of open-source models without much thought about their provenance. More fundamentally, what does provenance even mean for a model? And how can we adapt current supply chain security techniques to models? My goal today is to explore that.

Since I think best in code, I did my exploration while building safemodels, a proof-of-concept Python library that extends HuggingFace's safetensors and supports signing & verifying model origins.

How does this work with code?

The basic question developers have learned to ask about their code is "where did this come from?" From avoiding GPL-like licenses to avoiding another left-pad, there's a growing movement to bake verifiable certificates of origin into blobs of code. For some projects, it's simply a matter of enforcing signed git commits. Others go whole-hog with OSS solutions like sigstore, which can tell you exactly where a piece of code came from and whether it's been modified.

This last approach is particularly interesting for us. At a high level, here's how it works when you're signing, say, a Docker image:

1. Generate an ephemeral public/private keypair

2. Get an OpenID Connect token that proves who you are

3. Trade that token for a short-lived signing certificate bound to your public key

4. Sign the image with the private key, then discard the key

5. Upload the digest, signature & certificate to an auditable, immutable, append-only ledger

6. Staple the signature and certificate to the image

Steps 2 and 3 are handled by sigstore's public infrastructure: an OIDC provider and a dedicated public CA (Fulcio). A public instance of the ledger in Step 5 (Rekor) is provided by the Linux Foundation. This system requires a base level of trust in the LF (sorry, decentralized bros), but overall it's pretty solid.
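To make "auditable, append-only" a bit more concrete, here is a toy ledger in which every entry commits to the hash of the entry before it, so quietly rewriting an old record breaks the chain. This is a simplified hash chain for illustration only and does not reflect Rekor's actual design, which is a Merkle-tree transparency log with signed checkpoints. The `ToyLedger` class and its methods are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class ToyLedger:
    """Toy append-only log: each entry commits to its predecessor's hash."""

    entries: list = field(default_factory=list)

    @staticmethod
    def _entry_hash(prev_hash: str, record: dict) -> str:
        # Canonical JSON (sorted keys) so the same record always hashes the same way
        payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> int:
        """Append a record (e.g. digest + certificate) and return its index."""
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        self.entries.append(
            {"prev": prev, "record": record, "hash": self._entry_hash(prev, record)}
        )
        return len(self.entries) - 1

    def verify_chain(self) -> bool:
        """Recompute every link; any edited record or reordered entry fails."""
        prev = "0" * 64
        for entry in self.entries:
            if entry["prev"] != prev:
                return False
            if entry["hash"] != self._entry_hash(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True

    def contains_digest(self, digest: str) -> bool:
        """True only if the chain is intact and some entry records this digest."""
        return self.verify_chain() and any(
            e["record"].get("digest") == digest for e in self.entries
        )
```

Mutating `ledger.entries[0]["record"]` after the fact makes `verify_chain()` return `False`. A real log goes further and publishes signed checkpoints, so the operator can't simply recompute the whole chain after tampering.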

To verify the signature of some code, you follow a simple chain of trust:

- Verify the signature with the public key embedded in the certificate ✅

- Verify the source with the identity on the certificate ✅

- Verify the certificate with the CA ✅

- Verify the contents by checking the digest in the ledger ✅

If the certificate or public key is modified, verification against the CA will fail. If the signature is modified, verification with the certificate's public key will fail. If the contents are changed, their digest will no longer match the signed value in the immutable ledger. Success!
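The chain of trust is an ordered, fail-fast sequence of checks, and the first one that fails tells you which link was tampered with. Below is a minimal sketch with the cryptography stubbed out. `signature_ok` and `certificate_ok` are hypothetical callables you would back with a real signature and X.509 library. `Bundle` is likewise a made-up container. SHA-256 is an assumed choice of content digest.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Optional, Set, Tuple


@dataclass
class Bundle:
    """Hypothetical stand-in for what gets stapled to an artifact."""

    signature: bytes
    identity: str  # subject named in the certificate
    issuer: str    # OIDC issuer named in the certificate


def verify(
    contents: bytes,
    bundle: Bundle,
    expected: Tuple[str, str],                      # trusted (identity, issuer)
    ledger_digests: Set[str],                       # digests recorded in the ledger
    signature_ok: Callable[[str, Bundle], bool],    # stub: sig over digest vs cert key
    certificate_ok: Callable[[Bundle], bool],       # stub: cert chains to the CA
) -> Optional[str]:
    """Walk the chain of trust in order; return the first broken link, or None."""
    digest = hashlib.sha256(contents).hexdigest()  # digest of what we actually received
    checks = [
        ("signature", lambda: signature_ok(digest, bundle)),
        ("identity", lambda: (bundle.identity, bundle.issuer) == expected),
        ("certificate", lambda: certificate_ok(bundle)),
        ("ledger", lambda: digest in ledger_digests),
    ]
    for name, check in checks:
        if not check():
            return name
    return None
```

Changing even one byte of `contents` changes the recomputed digest, so the sketch reports `"ledger"` even when every stubbed cryptographic check passes.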

Now, what's stopping us from using this with ML models...

Easy Mode: Where did this model come from?

Of course, we can simply s/image/model/g above and have a workable solution for certifying the origin of models. The only real issues would be figuring out how to hash the model, how to sign the hash, and where to tack on that signature.

Model Digest

Hashing the model turns out to be pretty easy. Though there are numerous ways to serialize one, at the end of the day a model is simply an architecture plus a set of weights. My implementation in safemodels originally used xxhash (a fast, non-cryptographic hash) to create a digest of the weights, hashing the flattened version of each weight tensor after sorting the tensors by name. You can then use a strong, cryptographic hash to combine the weight digest with a canonical description of the architecture.
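Here is a minimal, stdlib-only sketch of that scheme. It assumes the weights are already available as a mapping from tensor name to a flat list of float32 values; a real implementation would read the raw tensor bytes from safetensors or PyTorch instead. It uses blake2b throughout. Length-prefixing names and data, and hashing the names at all, are my own choices made to avoid ambiguous concatenations. Tensor shapes are assumed to be covered by the architecture description. `weights_digest` and `model_digest` are hypothetical names, not the safemodels API.

```python
import hashlib
import json
import struct
from typing import Dict, List


def weights_digest(weights: Dict[str, List[float]]) -> bytes:
    """Hash every tensor's flattened float32 bytes, visiting tensors sorted by name."""
    h = hashlib.blake2b(digest_size=32)
    for name in sorted(weights):
        values = weights[name]
        encoded = name.encode("utf-8")
        # Length-prefix the name so ("ab", "c") and ("a", "bc") can't collide
        h.update(struct.pack("<Q", len(encoded)))
        h.update(encoded)
        # Length-prefix the data, then pack it as little-endian float32
        h.update(struct.pack("<Q", len(values)))
        h.update(struct.pack(f"<{len(values)}f", *values))
    return h.digest()


def model_digest(arch: dict, weights: Dict[str, List[float]]) -> str:
    """Combine the weight digest with a canonical (sorted-key JSON) architecture."""
    canonical_arch = json.dumps(arch, sort_keys=True, separators=(",", ":"))
    h = hashlib.blake2b(digest_size=32)
    h.update(weights_digest(weights))  # fixed 32 bytes, so the split is unambiguous
    h.update(canonical_arch.encode("utf-8"))
    return h.hexdigest()
```

Because the tensors are sorted by name and the architecture is serialized with sorted keys, the digest does not depend on dict ordering or on how the file happened to be laid out on disk.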

Update: safemodels now uses blake2b to hash the weights securely. Thanks to @antimatter15 for pointing out that xxhash, being non-cryptographic, is vulnerable to second-preimage attacks.

```python
from safemodels import safe_hash
from huggingface_hub import hf_hub_download as dl

st = dl("gpt2", filename="model.safetensors")
pt = dl("gpt2", filename="pytorch_model.bin")

# Same weights in two serialization formats produce one digest
assert safe_hash(st) == safe_hash(pt) == 11799646609665420805
```

Signed Digest

I built a small Python wrapper around cosign, the reference implementation of sigstore. It pops open your browser so you can authenticate via OAuth as whatever identity you want to sign with.
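Here is a hypothetical sketch of what a wrapper like this can do under the hood, assuming the cosign v2 CLI in keyless mode. `sign-blob` writes a bundle containing the signature, the certificate, and the transparency-log entry. `verify-blob` checks that bundle against an expected identity and OIDC issuer. The function names are made up for illustration and are not the safemodels API. The argv builders are separate from the `subprocess` calls so they can be inspected without cosign installed.

```python
import subprocess
from pathlib import Path
from typing import List


def sign_blob_cmd(blob: Path, bundle: Path) -> List[str]:
    # Keyless mode: cosign runs the OIDC login in a browser, obtains a short-lived
    # certificate, signs the file, and uploads the entry to the transparency log.
    return ["cosign", "sign-blob", str(blob), "--bundle", str(bundle), "--yes"]


def verify_blob_cmd(blob: Path, bundle: Path, identity: str, issuer: str) -> List[str]:
    # Pin both who signed (identity) and who vouched for them (OIDC issuer)
    return [
        "cosign", "verify-blob", str(blob),
        "--bundle", str(bundle),
        "--certificate-identity", identity,
        "--certificate-oidc-issuer", issuer,
    ]


def sign_digest(digest: str, workdir: Path) -> Path:
    """Write the model digest to a file, sign it, and return the bundle's path."""
    blob, bundle = workdir / "digest.txt", workdir / "digest.bundle"
    blob.write_text(digest)
    subprocess.run(sign_blob_cmd(blob, bundle), check=True)
    return bundle


def verify_digest(digest: str, bundle: Path, identity: str, issuer: str, workdir: Path) -> bool:
    """True if cosign accepts the bundle for this digest and this signer."""
    blob = workdir / "candidate.txt"
    blob.write_text(digest)
    return subprocess.run(verify_blob_cmd(blob, bundle, identity, issuer)).returncode == 0
```

Signing the digest string rather than the full weights file keeps the signed blob tiny, and any format that hashes to the same digest can reuse the signature.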

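```python
from safemodels import Cosign

cosign = Cosign()  # assumption: the wrapper is instantiated with default settings

good_hash = "11799646609665420805"
bad_hash = "00000000000000000000"

sig = cosign.sign(good_hash)  # opens a browser for the OIDC login

assert cosign.verify(good_hash, sig) is True
assert cosign.verify(bad_hash, sig) is False
```

Stapling the Signature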

Luckily, safetensors already have a metadata header (as JSON!) in their protocol.

```python
from safemodels.utils.safetensors import extract_metadata, update_meta
from huggingface_hub import hf_hub_download as dl

st = dl("gpt2", filename="model.safetensors")

print(extract_metadata(st))
# {'format': 'pt'}

update_meta(st, {"hello": "world"})

print(extract_metadata(st))
# {'format': 'pt', 'hello': 'world'}
```

We can simply staple the signature to the metadata, which makes it available to safemodels-aware clients while staying backwards-compatible with everything else.

```python
from safemodels import SafeModel
from huggingface_hub import hf_hub_download as dl

st = dl("gpt2", filename="model.safetensors")
sm = SafeModel.from_safetensor(st)

# or

st, sm = SafeModel.from_hf("gpt2", version="main")

sm.sign_safetensor(st)  # backwards-compatible rewrite of the file
```

There we have it! A safetensors-compatible, opt-in implementation of model signing, à la sigstore, in a tiny Python library.

Runtime Verification

To demonstrate the value of safemodels, you can monkey-patch huggingface_hub or safetensors to enforce extra constraints at load time. For example, we can ensure that we only load models from organizations we trust (which would have prevented the PoisonGPT attack), or simply that models haven't been tampered with.
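Here is a minimal sketch of that monkey-patching pattern with an identity allowlist. It assumes a `read_signer` callable that returns the `(identity, issuer)` pair from an already-verified certificate, or `None` for unsigned files. `install_guard` and `UntrustedModel` are hypothetical names, not the safemodels API. To keep it runnable without safetensors installed, a `SimpleNamespace` stands in for the module being patched.

```python
from types import SimpleNamespace
from typing import Callable, Iterable, Optional, Tuple

Signer = Tuple[str, str]  # (identity, issuer) taken from an already-verified certificate


class UntrustedModel(Exception):
    """Raised when a model's signer is missing or not on the allowlist."""


def install_guard(
    module,
    attr: str,
    read_signer: Callable[[str], Optional[Signer]],
    allowed: Iterable[Signer],
) -> None:
    """Replace module.<attr> with a wrapper that checks the signer before loading."""
    original = getattr(module, attr)
    allowlist = set(allowed)

    def guarded(path, *args, **kwargs):
        signer = read_signer(path)
        if signer is None:
            raise UntrustedModel(f"{path}: no verified signature")
        if signer not in allowlist:
            raise UntrustedModel(f"{path}: signed by {signer[0]} ({signer[1]}), not allowlisted")
        return original(path, *args, **kwargs)

    guarded.__wrapped__ = original  # keep a handle to the unpatched loader
    setattr(module, attr, guarded)


# Stand-in for the real `safetensors` module so the sketch runs anywhere
fake_safetensors = SimpleNamespace(safe_open=lambda path, framework="pt": ("opened", path))
```

Because the patch replaces the module attribute, it only affects code that looks the name up after installation. The session below calls `install()` before `from safetensors import safe_open`, which is exactly the order this approach needs.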

We'll start by downloading a small model and seeing what happens when we try to load it unsigned.

```pycon
>>> from safemodels import install, SafeModel
>>> install()
>>> from safetensors import safe_open
>>> st, sm = SafeModel.from_hf(name="hustvl/yolos-tiny", version="main")
>>> safe_open(st, framework="pt")
safemodels/check.py:23: UserWarning: Loaded a safetensor without a hash.
<builtins.safe_open object at 0x15d8976f0>
```

Now let's try signing it with my personal GitHub account (subject: [email protected], issuer: https://github.com/login/oauth). When we open the signed file without any constraints, we get feedback that verification passed.

```pycon
>>> sm.sign_safetensor(st)
>>> safe_open(st, framework="pt")
211it [00:00, 4968.10it/s]
Verified OK
<builtins.safe_open object at 0x15d8976f0>
```

If we decide, however, that we only want to allow models signed by EleutherAI, this won't work.

```pycon
>>> from safemodels import init, Issuer
>>> init(Issuer(identity="EleutherAI", issuer="https://auth.huggingface.com"))
>>> safe_open(st, framework="pt", throw=True)
211it [00:00, 4785.46it/s]
Error: none of the expected identities matched what was in the certificate,
got subjects [[email protected]] with issuer https://github.com/login/oauth
Traceback (most recent call last):
...
safemodels.InvalidSignature: Loaded a safetensor with an invalid signature!
```

And of course, this catches typos as well: the allowlist is now the only place where the org name has to be spelled correctly. Let's assume a copy signed by the misspelled EleuterAI org has been uploaded.

```pycon
>>> from huggingface_hub import hf_hub_download
>>> hf_hub_download("EleuterAI/gpt-j-6B", filename="model.safetensors")
Downloading model.safetensors: 100%|███| 548M/548M [00:14<00:00, 39.2MB/s]
211it [00:00, 4785.46it/s]
Error: none of the expected identities matched what was in the certificate,
got subjects [EleuterAI] with issuer https://auth.huggingface.com
Traceback (most recent call last):
...
safemodels.InvalidSignature: Loaded a safetensor with an invalid signature!
```

Hard Mode: Derived Models

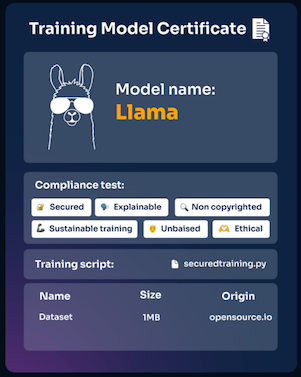

We may have solved the problem of identifying the source of a model, but what happens when people start fine-tuning? Anyone could claim to start from GPT-J, change the weights, sign the result, and ship it. Sure, we could verify that the fine-tuner is indeed the one publishing the new model, but there's still no way to attest to its provenance: what it was derived from, and how.

There are a few ways to go about solving this. My favorite is using oracles, provided by a competitive market, that can attest, for example, that a model has not been edited with ROME, or that it passes certain benchmarks. EleutherAI could start co-signing models that pass lm-evaluation-harness, similar to how Apple notarizes software that's been scanned and blessed to run on macOS.
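One way to express "publisher plus notary" is a policy that requires a specific set of co-signatures over the same model digest. Here is a minimal sketch. It assumes each attestation has already been cryptographically verified and reduced to `(digest, identity, issuer, claim)`. The `Attestation` type, the claim strings, and the example identities in the usage are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Set, Tuple


@dataclass(frozen=True)
class Attestation:
    digest: str    # model digest the statement is about
    identity: str  # who signed it
    issuer: str    # OIDC issuer that vouched for the identity
    claim: str     # what they assert, e.g. "published" or "passes-eval"


Requirement = Tuple[str, str, str]  # (identity, issuer, claim)


def satisfies_policy(
    digest: str, attestations: Iterable[Attestation], required: Set[Requirement]
) -> bool:
    """True only if every required (identity, issuer, claim) co-signed this exact digest."""
    present = {
        (a.identity, a.issuer, a.claim) for a in attestations if a.digest == digest
    }
    return required <= present
```

Tying every attestation to the digest matters: a notary's blessing for one set of weights says nothing about a fine-tune that reuses the same name.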

This was as far as my experiment went. I built a play oracle in safemodels that simply stores well-known hashes of trusted fine-tunes in a repo, which is synced brew-style. It populates itself as you use safemodels, and you can open PRs to add new hashes.
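A registry like that can be as simple as a JSON file mapping model digests to a short description of each trusted fine-tune. Here is a sketch, assuming a local checkout of such a repo. Syncing it brew-style would just be a periodic `git pull` and is left out. The file layout and the `TrustedHashes` class are hypothetical, not what safemodels actually ships.

```python
import json
from pathlib import Path
from typing import Dict, Optional


class TrustedHashes:
    """Look up model digests in a JSON file of known-good fine-tunes."""

    def __init__(self, path: Path):
        self.path = path
        self.known: Dict[str, dict] = (
            json.loads(path.read_text()) if path.exists() else {}
        )

    def lookup(self, digest: str) -> Optional[dict]:
        """Return the entry for a digest, or None if it isn't known."""
        return self.known.get(digest)

    def record(self, digest: str, info: dict) -> None:
        """Add an entry locally (upstream, this would be a PR to the registry repo)."""
        self.known[digest] = info
        self.path.write_text(json.dumps(self.known, indent=2, sort_keys=True))
```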

There are some commercial alternatives being built, and I'd love to learn more about how people are solving this problem! Play around with my demos (pip install safemodels), and reach out if you'd like to chat.
